Semantic Partners explore Graphwise's latest iteration of its Talk To Your Graph feature

At Semantic Partners we proactively look out for tools and approaches that can help us and our clients interact with knowledge graphs in user-friendly ways.

Talk To Your Graph (1.0) was a feature in Ontotext’s GraphDB that let you use Retrieval Augmented Generation (RAG) to ask questions about an RDF Knowledge Graph loaded in GraphDB. However, this first version required a graph-specific configuration from the user: for example, one had to specify which properties or classes to consider. That is one of the reasons why, when I used GraphDB to showcase GraphRAG in another article, I implemented the RAG part myself on top of GraphDB’s Similarity plugin.

Now that GraphDB is part of Graphwise, the Talk To Your Graph feature has been redesigned to be more user-friendly (available from GraphDB 10.8). It is a no-code showcase of various features that are available as APIs on the Graphwise platform. It is easy to configure and, in our view, has potential for building demos and for training. As a Graphwise partner, we describe in this blog our experience with Talk To Your Graph 2.0 and how it can be used in a practical use case: summarising, classifying, and recommending employees based on a repository of skillsets and CVs.

How does it work?

Talk To Your Graph 2.0 is a feature whereby GraphDB connects to OpenAI’s ChatGPT (it is bound to OpenAI’s Assistants API) and lets you define an agent over a GraphDB repository containing RDF data. This “agent” interacts with us as a chatbot, so that we can interrogate the graph using various tools, or retrievers; this is the RAG pattern (see this blog of ours for a similar approach). Four retrievers (“query methods”) are available, and we can choose any combination of one or more of them. They all return RDF entities and their associated information for a given query, giving the LLM context before it responds.
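To make the pattern concrete, here is a minimal Python sketch of the loop just described: each selected retriever returns entities as triples, and the triples are pasted into the prompt as context. Everything in it (the `Retriever` type, the toy retrievers, `build_context_prompt` and the prompt wording) is hypothetical and illustrative; it is not how GraphDB implements the feature, and the actual call to OpenAI is left out.

```python
from typing import Callable

# A triple is (subject, predicate, object); a retriever maps a question to triples.
Triple = tuple[str, str, str]
Retriever = Callable[[str], list[Triple]]


def build_context_prompt(question: str, retrievers: dict[str, Retriever],
                         selected: list[str]) -> str:
    """Run the selected retrievers and paste their triples into the prompt as context."""
    context: list[str] = []
    for name in selected:
        for s, p, o in retrievers[name](question):
            line = f"{s} {p} {o} ."
            if line not in context:  # retrievers may return overlapping triples
                context.append(line)
    return ("Answer using only the context below.\nContext:\n"
            + "\n".join(context) + f"\nQuestion: {question}")


# Toy retrievers standing in for, e.g., SPARQL and similarity search (hypothetical data).
def toy_sparql(question: str) -> list[Triple]:
    return [(":ADEV", "skos:notation", '"ADEV"')]


def toy_similarity(question: str) -> list[Triple]:
    return [(":ADEV", "skos:notation", '"ADEV"'),
            (":ADEV", "skos:prefLabel", '"Animation development"')]


retrievers = {"sparql": toy_sparql, "similarity": toy_similarity}
prompt = build_context_prompt("What is ADEV?", retrievers, ["sparql", "similarity"])
print(prompt)
```

Deduplication is worth having because, as in this toy, different retrievers often surface the same entity.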

Retrieval methods

SPARQL query: this enables text-to-SPARQL on the dataset, so it is good for specific, closed-ended queries. Because it relies on the ontology schema, you must provide the ontology. If the ontology is not in its own graph, or if you cannot easily identify it by its IRI namespace, a CONSTRUCT query like the following can extract the ontology part of your data. We exclude the “attribute” properties so as not to overwhelm the prompt; they are not needed in our use case:

```sparql
PREFIX owl: <http://www.w3.org/2002/07/owl#>
PREFIX sfia: <https://rdf.sfia-online.org/9/ontology/>
PREFIX onto: <http://www.ontotext.com/>
PREFIX attributes: <https://rdf.sfia-online.org/9/attributes/>

CONSTRUCT { ?s ?p ?o }
# Only explicit (asserted) statements, no inferred ones
FROM onto:explicit
WHERE {
  ?s a ?type .
  ?s ?p ?o .
  # Keep only the ontology terms: classes and properties
  VALUES ?type {
    owl:Class
    owl:AnnotationProperty
    owl:ObjectProperty
    owl:DatatypeProperty
  }
  # Skip the "attribute" properties to keep the prompt small
  FILTER(!STRSTARTS(STR(?s), STR(attributes:)))
}
```

You will also notice that the query is restricted to explicit (not inferred) statements through FROM onto:explicit, because we want to avoid redundant inferred triples in the ontology (such as X rdfs:subClassOf X for every class X). Sent to the LLM, such triples would only add noise to the prompt.
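To check what the ontology extraction returns before handing it to the agent, you can run the CONSTRUCT query against the repository's SPARQL endpoint yourself. Below is a sketch using only the Python standard library. It assumes a local GraphDB at `http://localhost:7200` and the `sfia` repository used later in this post, and it follows the standard SPARQL 1.1 Protocol (a form-encoded POST with a `query` parameter), asking for Turtle. The request is only built here; the commented-out line would send it to a running GraphDB.

```python
import urllib.parse
import urllib.request

# Assumption: a local GraphDB instance and the "sfia" repository used in this post.
ENDPOINT = "http://localhost:7200/repositories/sfia"

ONTOLOGY_QUERY = """
PREFIX owl: <http://www.w3.org/2002/07/owl#>
PREFIX onto: <http://www.ontotext.com/>
PREFIX attributes: <https://rdf.sfia-online.org/9/attributes/>
CONSTRUCT { ?s ?p ?o }
FROM onto:explicit
WHERE {
  ?s a ?type ; ?p ?o .
  VALUES ?type { owl:Class owl:AnnotationProperty owl:ObjectProperty owl:DatatypeProperty }
  FILTER(!STRSTARTS(STR(?s), STR(attributes:)))
}
"""


def build_construct_request(endpoint: str, query: str) -> urllib.request.Request:
    """Build a SPARQL 1.1 Protocol POST request that asks for Turtle output."""
    body = urllib.parse.urlencode({"query": query}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Accept": "text/turtle",
        },
    )


request = build_construct_request(ENDPOINT, ONTOLOGY_QUERY)
# turtle = urllib.request.urlopen(request).read().decode("utf-8")  # needs a running GraphDB
```

Counting the returned triples is a quick way to see how much ontology text will end up in the prompt.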

Full-text search: searches the text inside literals. For this method, full-text search must be enabled in the repository configuration.
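Conceptually, full-text search looks up words inside literal values and returns the resources that carry them. The toy inverted index below illustrates just that idea in plain Python. GraphDB's actual full-text index adds proper tokenisation, analysers and ranking, none of which is reproduced here, and the sample triples (including the `:DENG` description) are made up for illustration.

```python
import re
from collections import defaultdict

Triple = tuple[str, str, str]


def tokenize(text: str) -> list[str]:
    """Lower-case word tokens; a real full-text index uses proper analysers."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_literal_index(triples: list[Triple]) -> dict[str, set[str]]:
    """Map each word found in a literal object to the subjects that carry it."""
    index: dict[str, set[str]] = defaultdict(set)
    for subject, _predicate, obj in triples:
        if obj.startswith('"'):  # only index literals, not IRIs
            for token in tokenize(obj):
                index[token].add(subject)
    return index


def full_text_search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return subjects whose literals contain every query word (AND semantics)."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    results = set(index.get(tokens[0], set()))  # copy, so the index is not mutated
    for token in tokens[1:]:
        results &= index.get(token, set())
    return results


# Made-up sample data.
triples = [
    (":ADEV", "skos:prefLabel", '"Animation development"'),
    (":DENG", "skos:prefLabel", '"Data engineering"'),
    (":DENG", "rdfs:comment", '"Designing and building data pipelines"'),
]
index = build_literal_index(triples)
print(full_text_search(index, "data pipelines"))  # {':DENG'}
```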

Similarity (vector) search on literals: this is essentially the Similarity plugin, which we showcased in this article. It finds entities related to the search and describes them with their triples. Defining a similarity index on a given repository takes just a couple of clicks in the Talk To Your Graph UI.
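The retrieve-then-describe pattern can be sketched as follows: rank entities by how similar the text of their literals is to the query, then return all triples about the top hits, much as a DESCRIBE would. As a deliberately simple stand-in for the vectors computed by GraphDB's Similarity plugin, this toy uses bag-of-words cosine similarity, so it only rewards shared words. The sample data and the `top_k` parameter are illustrative assumptions.

```python
import math
import re
from collections import Counter, defaultdict

Triple = tuple[str, str, str]


def bag_of_words(text: str) -> Counter:
    """Word counts; a crude stand-in for a real embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similarity_search(triples: list[Triple], query: str, top_k: int = 1) -> list[Triple]:
    """Rank subjects by similarity of their literal text to the query, then 'describe' the hits."""
    text_by_subject: dict[str, str] = defaultdict(str)
    for s, _p, o in triples:
        if o.startswith('"'):  # only literals contribute text
            text_by_subject[s] += " " + o.strip('"')
    query_vec = bag_of_words(query)
    scores = {s: cosine(query_vec, bag_of_words(text)) for s, text in text_by_subject.items()}
    ranked = sorted((s for s in scores if scores[s] > 0), key=scores.get, reverse=True)
    hits = set(ranked[:top_k])
    # DESCRIBE-like step: return every triple about the selected entities.
    return [t for t in triples if t[0] in hits]


# Illustrative sample data.
triples = [
    (":PROG", "skos:prefLabel", '"Programming/software development"'),
    (":PROG", "skos:notation", '"PROG"'),
    (":ADEV", "skos:prefLabel", '"Animation development"'),
    (":ADEV", "skos:notation", '"ADEV"'),
]
for triple in similarity_search(triples, "software development"):
    print(triple)
```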

ChatGPT retrieval: this option uses ChatGPT to perform a vector search on textual representations of the RDF data, similarly to the Similarity plugin.
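A vector search of this kind first needs each entity turned into a piece of text. We do not reproduce how Talk To Your Graph renders entities for this option; the sketch below shows one hypothetical way to do it, grouping triples by subject and writing one "predicate: value" line per triple, with human-readable labels substituted for IRIs where known. The properties `:hasSkillLevel` and `:summary` are made up.

```python
from collections import defaultdict

Triple = tuple[str, str, str]


def entity_documents(triples: list[Triple], labels: dict[str, str]) -> dict[str, str]:
    """Render each subject's triples as a small text document (hypothetical format)."""
    lines_by_subject: dict[str, list[str]] = defaultdict(list)
    for s, p, o in triples:
        value = labels.get(o, o.strip('"'))  # use a readable label for IRIs when we have one
        lines_by_subject[s].append(f"{labels.get(p, p)}: {value}")
    return {s: labels.get(s, s) + "\n" + "\n".join(lines)
            for s, lines in lines_by_subject.items()}


# Hypothetical sample data.
triples = [
    (":emp42", ":hasSkillLevel", ":DENG_4"),
    (":emp42", ":summary", '"Builds data pipelines for clients."'),
]
labels = {":emp42": "Employee 42", ":hasSkillLevel": "has skill level",
          ":DENG_4": "Data engineering level 4", ":summary": "summary"}
docs = entity_documents(triples, labels)
print(docs[":emp42"])
```

Each such document would then be embedded and indexed, so that the vector search can match it against the user's question.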

The agent we create will have the query methods we select, plus some default functions, for example one that tells it the current date. Since it is quick to configure, let’s put it to use!
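OpenAI's APIs describe each tool to the model as a function with a JSON Schema for its parameters. The sketch below assembles such a list from the selected query methods plus an always-present date helper. The tool names, descriptions and argument names are our own hypothetical choices, not the ones GraphDB registers.

```python
import json
from datetime import date
from typing import Optional

# Hypothetical mapping from query methods to tools: (name, description, argument name).
QUERY_METHOD_TOOLS = {
    "sparql": ("sparql_query", "Run a SPARQL query against the repository.", "query"),
    "fts": ("full_text_search", "Find entities whose literals contain the given words.", "text"),
    "similarity": ("similarity_search", "Find entities semantically close to the text.", "text"),
}


def function_tool(name: str, description: str, param: Optional[str] = None) -> dict:
    """One entry in the OpenAI function-tool format, with at most one string argument."""
    properties = {param: {"type": "string"}} if param else {}
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object", "properties": properties,
                           "required": [param] if param else []},
        },
    }


def agent_tools(selected_methods: list[str]) -> list[dict]:
    """Tools for the selected query methods, plus a default date helper that is always added."""
    tools = [function_tool(*QUERY_METHOD_TOOLS[m]) for m in selected_methods]
    tools.append(function_tool("now", "Return today's date in ISO 8601 format."))
    return tools


def now() -> str:
    """What the application would run when the model calls the 'now' tool."""
    return date.today().isoformat()


print(json.dumps([t["function"]["name"] for t in agent_tools(["sparql", "similarity"])]))
```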

The data

A typical Knowledge Graph is made of an ontology acting as a schema (made of classes and “properties”, that is, attributes and relations) and instance data, where individual objects are described by those attributes and linked through those relations. The Star Wars example dataset provided in the GraphDB documentation shows how you can ask questions about Star Wars individuals (“Is Anakin a Jedi?”, “Is Tatooine a hot planet?”, “What are some hot planets?”).

This is the most immediate application of the Talk To Your Graph tool: a human-friendly interface to your data. Instead of using a query language, or building a dedicated UI, we can imagine writing (or speaking) to our graph through an LLM-enabled bot.

A practical use case using skillsets and CVs

We would like to use this tool for something slightly more subtle: to classify, or recommend, data points within or outside the graph. In the technology domain, this could mean suggesting a tool for a given use case, or a vendor specialising in the tools that use case needs.

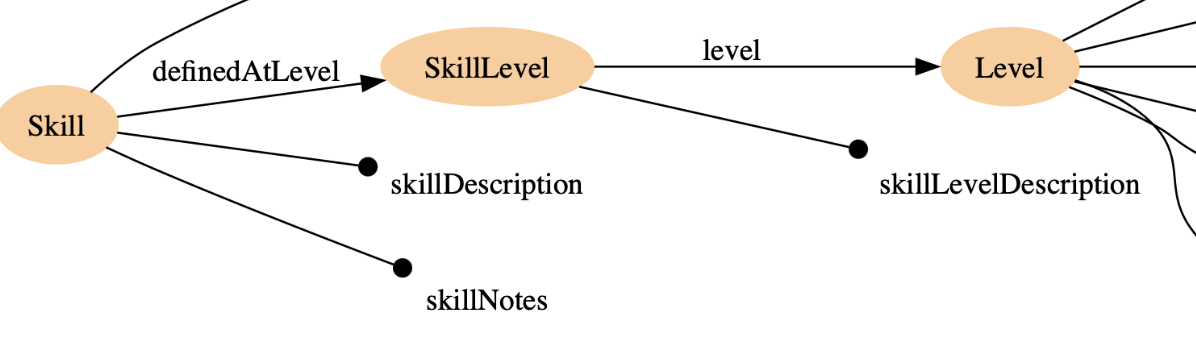

We recently designed an RDF version of the SFIA competency framework. The result is a vocabulary of skills and skill levels, modelled in RDF, that an organisation (like ours) can use to model its internal roles and competences. A skill level in SFIA combines a generic skill (for example, ADEV, “Animation development”) with a level from 1 to 7 (for example, level 4, typical of someone with the autonomy to enable themselves and others), giving a skill level such as ADEV level 4. SFIA provides natural language descriptions along various dimensions for both skills and levels. These textual descriptions are a natural fit for a vector-enabled, LLM-enabled system that finds and navigates similarities and recommends classifications between roles and competences. In fact, we can define roles as lists of skill levels, and we can also attach skills or skill levels to people directly. So we can define a dataset of roles, differentiated by core competences/skills and their levels (junior / senior, manager / contributor, etc.).
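The structure just described (a skill plus a level from 1 to 7 gives a skill level; a role is a list of skill levels) can be sketched with plain Python dataclasses. The class and field names are hypothetical and do not mirror the IRIs of our RDF model, and the `covers` rule (a higher level satisfies a lower requirement for the same skill) is our own simplifying assumption.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Skill:
    code: str   # e.g. "ADEV"
    label: str  # e.g. "Animation development"


@dataclass(frozen=True)
class SkillLevel:
    skill: Skill
    level: int  # SFIA levels run from 1 to 7

    def __post_init__(self) -> None:
        if not 1 <= self.level <= 7:
            raise ValueError(f"SFIA levels run from 1 to 7, got {self.level}")

    def __str__(self) -> str:
        return f"{self.skill.code} level {self.level}"


@dataclass
class Role:
    """A role defined as a list of skill levels."""
    name: str
    skill_levels: list[SkillLevel] = field(default_factory=list)

    def covers(self, required: SkillLevel) -> bool:
        """Assumption: the role covers a requirement if it has that skill at that level or higher."""
        return any(sl.skill == required.skill and sl.level >= required.level
                   for sl in self.skill_levels)


adev = Skill("ADEV", "Animation development")
animator = Role("Animator", [SkillLevel(adev, 4)])  # hypothetical role
print(animator.skill_levels[0])              # ADEV level 4
print(animator.covers(SkillLevel(adev, 3)))  # True
```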

Suppose we have used an LLM to summarise our internal, anonymised CVs. We will try to use Talk To Your Graph to assign skill levels to our own people, to test how well the approach works. This tool does a job that would traditionally have required a fairly long Natural Language Processing pipeline, and does it in a no-code fashion. In effect, we perform some steps of a document classification pipeline using natural language as the interface, guiding the retrieval and the LLM without having to deal with them directly.

This is the core of the model (see more on our SFIA-to-RDF GitHub repo):

Testing out the tool

First, get an OpenAI API key (if you are logged in to your OpenAI account, it should be here). Note that you need to purchase credit for API usage; access to the ChatGPT chatbot alone is not enough. Then provide the key to GraphDB as an environment variable or a configuration property. If you are using a desktop instance of GraphDB, the easiest way is through the Settings of the main panel. The property to set is graphdb.openai.api-key.
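If you run GraphDB as a server rather than the desktop app, one option is to add the property to the `graphdb.properties` file in your installation's `conf` directory and restart GraphDB (we assume configuration properties are read at startup). The helper below is a hedged sketch: it handles only the `key=value` form of Java properties files, and the demo runs on a throwaway file so that nothing in your installation is touched.

```python
import tempfile
from pathlib import Path

PROPERTY = "graphdb.openai.api-key"


def set_property(path: Path, key: str, value: str) -> None:
    """Insert or replace `key=value` in a .properties file (only the `=` separator is handled)."""
    lines = path.read_text(encoding="utf-8").splitlines() if path.exists() else []
    new_line = f"{key}={value}"
    for i, line in enumerate(lines):
        is_comment = line.lstrip().startswith(("#", "!"))
        if not is_comment and line.split("=", 1)[0].strip() == key:
            lines[i] = new_line
            break
    else:
        lines.append(new_line)
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")


# Demo on a throwaway file. For real use, point `path` at your installation's
# conf/graphdb.properties and pass the key, e.g. os.environ["OPENAI_API_KEY"].
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "graphdb.properties"
    demo.write_text("graphdb.home=/data/graphdb\n", encoding="utf-8")
    set_property(demo, PROPERTY, "sk-old")
    set_property(demo, PROPERTY, "sk-example")  # replaces, does not duplicate
    demo_content = demo.read_text(encoding="utf-8")
print(demo_content)
```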

Then, we go to Lab → Talk To Your Graph (http://localhost:7200/ttyg) and define our agent.

We have loaded our RDF dataset into a repository called sfia. We also define a Similarity index on that repository (this is very simple, and you can find more about it in a different blog).

If you have some experience with agentic RAG, the panel will look quite intuitive. It requires:

- A name for the agent and the repository the agent will use (sfia)

- The Query methods we want to use (we will stick to SPARQL and Similarity search)

- The model we want to use (we will use gpt-4o-mini)

- “Additional instructions” to give to the prompt, that is, any hint we want to give the LLM. For example, in our case it might be sensible to have:

“Respond using only the data in the repository. The data contains definitions of competences from a competency framework. The labels are given as rdfs:label, skos:prefLabel and skos:notation”.

Clicking “Explain settings” shows how these settings are rendered in the final prompt, namely the instructions and the ontology.

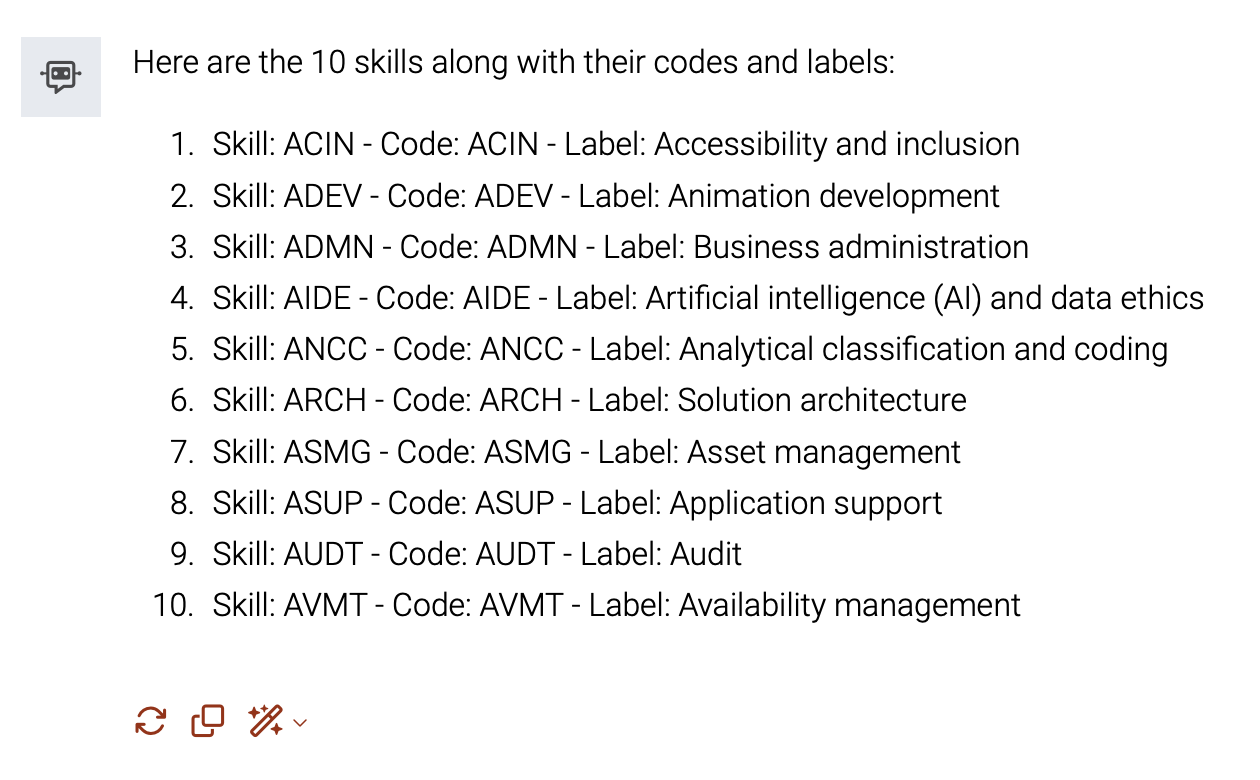
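The idea behind “Explain settings” can be illustrated by composing a system prompt from the additional instructions and the ontology text. The `AgentSettings` class and the prompt template below are entirely hypothetical (GraphDB's real wording is whatever “Explain settings” displays), as is the one-line ontology fragment; the point is only to show how the two inputs end up in one prompt, and why a compact ontology keeps that prompt short.

```python
from dataclasses import dataclass


@dataclass
class AgentSettings:
    """Hypothetical mirror of the fields in the agent panel."""
    name: str
    repository: str
    query_methods: list[str]
    model: str  # selects the LLM; not part of the prompt text
    additional_instructions: str


def render_system_prompt(settings: AgentSettings, ontology_turtle: str) -> str:
    """Combine instructions and ontology into one prompt (illustrative template)."""
    return "\n\n".join([
        f"You are {settings.name}, answering questions about the GraphDB repository "
        f"'{settings.repository}' using these tools: {', '.join(settings.query_methods)}.",
        settings.additional_instructions,
        "The repository uses this ontology:\n" + ontology_turtle.strip(),
    ])


settings = AgentSettings(
    name="SFIA agent",
    repository="sfia",
    query_methods=["sparql", "similarity"],
    model="gpt-4o-mini",
    additional_instructions=("Respond using only the data in the repository. The data contains "
                             "definitions of competences from a competency framework. The labels "
                             "are given as rdfs:label, skos:prefLabel and skos:notation."),
)
prompt = render_system_prompt(settings, "sfia:Skill a owl:Class .\n")  # hypothetical ontology text
print(prompt)
```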

Let us try a simple query: “List 10 Skills, together with their codes and labels.”

We now ask the agent how it got to the answer.

Good, the generated SPARQL query is correct. Note, however, that SPARQL generation is not always accurate and can produce problematic queries (syntactically correct but with wrong triple patterns, or with made-up IRIs), so it is good to have the explainability button.
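Since made-up IRIs are one of the failure modes, a custom solution could add a cheap guardrail that checks the IRIs in a generated query against the ontology (for example, the subjects returned by the CONSTRUCT query above) before running it. The regex-based sketch below is a crude heuristic, not a SPARQL parser: it expands prefixed names using the query's own PREFIX declarations, ignores string literals, and reports IRIs that are neither known nor in a trusted namespace. The `sfia:Skill` and `sfia:madeUpProperty` names in the example are hypothetical.

```python
import re

PREFIX_RE = re.compile(r"PREFIX\s+([A-Za-z][\w-]*):\s*<([^>]*)>", re.IGNORECASE)
IRI_RE = re.compile(r"<([^>\s]*)>")
STRING_RE = re.compile(r'"(?:[^"\\]|\\.)*"')
PNAME_RE = re.compile(r"(?<![\w?$])([A-Za-z][\w-]*):([A-Za-z_][\w-]*)")


def unknown_iris(query: str, known: set[str], trusted_namespaces: tuple[str, ...]) -> set[str]:
    """Return IRIs used in `query` that are neither known nor in a trusted namespace."""
    prefixes = dict(PREFIX_RE.findall(query))
    body = PREFIX_RE.sub(" ", query)   # drop PREFIX declarations
    body = STRING_RE.sub('""', body)   # drop string literals, which may contain colons
    used = set(IRI_RE.findall(body))   # full IRIs written as <...>
    body = IRI_RE.sub(" ", body)
    for prefix, local in PNAME_RE.findall(body):
        # Expand prefixed names; an undeclared prefix is suspicious, so keep it as-is.
        used.add(prefixes[prefix] + local if prefix in prefixes else f"{prefix}:{local}")
    return {iri for iri in used
            if iri not in known and not iri.startswith(trusted_namespaces)}


SFIA = "https://rdf.sfia-online.org/9/ontology/"
SKOS = "http://www.w3.org/2004/02/skos/core#"
generated = f"""PREFIX sfia: <{SFIA}>
PREFIX skos: <{SKOS}>
SELECT ?skill ?code WHERE {{
  ?skill a sfia:Skill ; skos:notation ?code ; sfia:madeUpProperty "a:b" .
}} LIMIT 10"""
print(unknown_iris(generated, known={SFIA + "Skill"}, trusted_namespaces=(SKOS,)))
```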

Let us try to ask for Skills related to Software Development.

Why is that? The agent tried to interpret Software Development as a SFIA category and generated a first query that failed. It then fell back to selecting skills based on what the LLM knows to be related to software development in general. That is not what we want. We want the agent to use Similarity search here, to find skills whose descriptions are semantically close to the concept of software development. For some reason the agent did not choose Similarity search, but we can simply ask it to.

And let us use explainability to see how it got there:

Great! It used similarity search: under the hood it still runs a SPARQL query, but one that queries the Similarity index, and it then uses DESCRIBE to fetch triples about every entity in the graph matching the vector search.
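For reference, a query against a Similarity index has roughly the shape built below: a search term goes in, and matching entities come out with their scores, followed by a DESCRIBE of the hits. The template follows the text-similarity search example in the GraphDB documentation as we recall it, so please check it against your GraphDB version; the query Talk To Your Graph actually generates may differ, and the index name `sfia_skills` is hypothetical.

```python
import re

SIMILARITY_PREFIXES = """PREFIX : <http://www.ontotext.com/graphdb/similarity/>
PREFIX inst: <http://www.ontotext.com/graphdb/similarity/instance/>"""


def sparql_string(text: str) -> str:
    """Quote text as a SPARQL string literal, escaping backslashes, quotes and newlines."""
    escaped = text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'


def similarity_query(index_name: str, term: str, limit: int = 5) -> str:
    """Text-similarity search over a (hypothetically named) Similarity index."""
    if not re.fullmatch(r"[A-Za-z_][\w-]*", index_name):
        raise ValueError(f"unexpected index name: {index_name!r}")
    return f"""{SIMILARITY_PREFIXES}
SELECT ?entity ?score {{
  ?search a inst:{index_name} ;
      :searchTerm {sparql_string(term)} ;
      :searchParameters "" ;
      :documentResult ?result .
  ?result :value ?entity ;
          :score ?score .
}}
ORDER BY DESC(?score)
LIMIT {int(limit)}"""


def describe_query(entity_iris: list[str]) -> str:
    """Follow-up DESCRIBE that fetches the triples about each hit."""
    return "DESCRIBE " + " ".join(f"<{iri}>" for iri in entity_iris)


print(similarity_query("sfia_skills", "software development"))
```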

As we can see, the agent could be better at selecting which tool to use. As the Talk To Your Graph documentation mentions, the SPARQL generation tool lets you make structured queries in natural language. It is better for closed-ended questions, those that ask directly for a node property. It also supports aggregate queries (like “count the nodes such that...”). The Similarity (vector) search approach, instead, is more versatile for questions that need to match nodes by semantic relatedness (e.g., finding a node labelled “software” by writing “computer”). If the use case allows it, we could consider giving the agent only Similarity search, which is flexible and works well with questions like mine, where concepts need to be interpreted in an “open” way.

Let us see if we can replicate the approach we used in this article. There, we developed a demo solution on top of the Similarity plugin. Although just a demo, it was crafted for the particular use case of CV matching, so it combined graph retrieval and document retrieval, and it could be more sophisticated precisely because it was built for that use case. With Talk To Your Graph, which is a more general interface for interrogating a graph, we could not get quite the same level of results, but it can still showcase the same ideas.

Let us take a short description of a professional (I will use mine) and ask which SFIA skills can be traced in it.

As mentioned, we have pre-loaded a small set of resume summaries into the RDF dataset, so I can guide the agent to consider my resume, find relevant skills in it, and tell me which skill levels the resume covers.

In this anonymised dataset, I am Mat Chisel!

(Oops, I made a typo in my fake name, but the tool still found the correct information)

Very good! It is hard to tell whether these are the best two skills, but that depends on the similarity index ranking, which is a general problem for any such solution and can be dealt with separately. Let us push a bit further and ask which Skill levels for these two skills can be traced in this resume.

So the response is level 5 or 6 for Specialist advice and level 4 or 5 for Data Engineering, which is reasonable, especially given the limited information in the summarised resume. A human in the loop could then use these suggestions according to their own policies (for example, taking safer or bolder bets).
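As an illustration of such a policy, the ranges from the response above can be turned into concrete levels. The policy names, the `max_spread` parameter and the rule that a range spanning more than one level step goes to a human reviewer are our own hypothetical choices.

```python
from typing import Literal

Policy = Literal["conservative", "optimistic"]

# Level ranges suggested by the agent for the resume above.
suggestions = {"Specialist advice": (5, 6), "Data Engineering": (4, 5)}


def assign_levels(ranges: dict[str, tuple[int, int]], policy: Policy,
                  max_spread: int = 1) -> tuple[dict[str, int], list[str]]:
    """Pick one level per skill, and flag ranges too wide to decide automatically."""
    assigned: dict[str, int] = {}
    for_review: list[str] = []
    for skill, (low, high) in ranges.items():
        if high - low > max_spread:
            for_review.append(skill)  # leave it to the human in the loop
            continue
        assigned[skill] = low if policy == "conservative" else high
    return assigned, for_review


print(assign_levels(suggestions, "conservative"))  # ({'Specialist advice': 5, 'Data Engineering': 4}, [])
```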

Considerations

Talk To Your Graph 2.0 is a no-code tool to configure an agent to explore use cases over your Knowledge Graph. To operationalise the same idea for production, check out https://www.ontotext.com/blog/talk-to-your-graph-client, and the accompanying repository https://github.com/Ontotext-AD/ttyg-client, which shows in detail how to customise the idea programmatically.

We showed that the tool can also answer questions that do not map directly to a structured query, by using the power of similarity (vector) search. It is not for the casual user: used naively, it can easily fail. But we got decent responses with minimal effort. It is a user-friendly showcase of features that the Graphwise platform also provides as libraries and APIs, and that a real solution would put together in a bespoke fashion.

It reflects the innovative, forward thinking we have come to expect of Ontotext, and we are delighted to see it carried forward in Graphwise.

If our examples have piqued your curiosity about real applications, come and talk to us and Graphwise.